Our Top 10 Tips for Building High-Performance MongoDB Applications

Introduction

Last week, I spoke about my journey with MongoDB at the inaugural MongoDB User Group meeting in Auckland, where I shared my top 10 tips for building high-performance applications with MongoDB. Today, I want to build on those ten points and add some flesh to the bones.

1. Use MongoDB Atlas for both dev and prod workloads.

As discussed on Thursday evening, critical database failures can have a catastrophic impact on your business.

Unless your business explicitly specialises in running databases at scale as a core offering, I would advise you to focus on your customers and your core value proposition and deliver against that faster than your competitors can. That's what will build your business.

One of the best ways of doing that is by adopting a "build-on-the-shoulders-of-giants" mindset, and in the case of database infrastructure, there is none better than MongoDB's Atlas service.

I've heard the argument that you can self-host more cheaply than subscribing to Atlas, and when you only consider actual dollars and cents, that might be true, but ask yourself: at what cost?

Regarding development clusters, the cost argument doesn't stack up at all. Atlas offers the ability to pause clusters when they are not needed (for example, outside of business hours when your devs aren't working). This capability allows you to easily save 60-70% of your development cluster cost. It makes any argument against Atlas for dev clusters really difficult to justify.
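As a rough check on that figure, the sketch below compares a cluster that runs around the clock with one that only runs during working hours. The 10-hour day and 5-day week are assumptions for illustration, and the calculation covers compute hours only, since a paused Atlas cluster is still billed for its storage.

```python
HOURS_PER_WEEK = 24 * 7  # 168 hours in a week


def paused_saving(hours_per_day: float, days_per_week: int) -> float:
    """Fraction of weekly compute hours saved by pausing outside working hours."""
    running_hours = hours_per_day * days_per_week
    return 1 - running_hours / HOURS_PER_WEEK


# Assumed schedule: developers work 10 hours a day, 5 days a week.
saving = paused_saving(hours_per_day=10, days_per_week=5)
print(f"{saving:.0%}")  # prints 70%
```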

Regarding production workloads, I would argue that the production-grade functionality that Atlas delivers out of the box is a solid financial argument for you to adopt the service. In short, it would take hundreds, if not thousands, of years of hosting savings to justify the development of those capabilities within your business.

Again, the question you should be asking is: are you in the business of running databases at scale, or do you use databases to deliver your core value prop to your customers?

2. Think in documents.

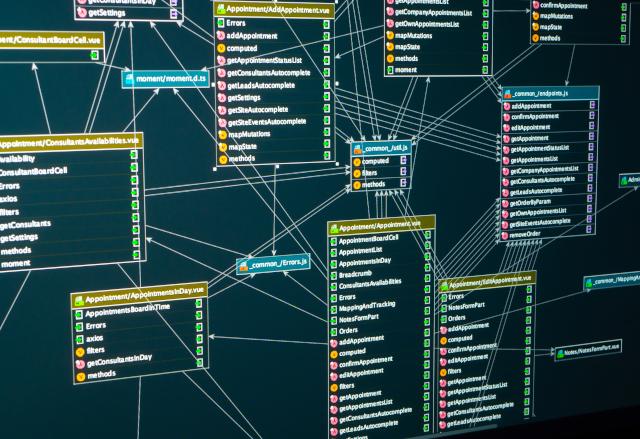

I once worked on a system that delivered a certain resource through an API. The resource was composed of data within a relational database that spanned ten database tables. The SQL statement to join the data required was almost 200 lines long and involved multiple nested joins. Consequently, it was difficult to achieve consistently high performance due to the complexity of fetching the data.

Replacing the relational database in question with a document database, such as MongoDB, but retaining the data model will not make any difference. In fact, it might make it worse.

Relational thinking is suitable for relational databases. Applying relational design to a document database will disappoint you.

If I modelled that same system today, using our Cloudize API Framework and MongoDB, the resource would map directly to a single document in the database. Consequently, no joins would be required, and delivering single-millisecond response times would be reasonably easy to achieve at a far lower cost. Additionally, what took a team of gifted engineers months to design and develop could be done within a day.
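To make the idea concrete, here is a minimal sketch of a resource stored as one document. The "order" resource and every field name in it are hypothetical and are not taken from the system described above; the point is only that data read together is stored together.

```python
# Hypothetical "order" resource held as ONE document. A relational model
# would typically spread this over orders, customers, addresses and
# line_items tables and join them on every read.
order = {
    "_id": "order-1001",
    "customer": {"name": "Ada Example", "email": "ada@example.com"},
    "shipping_address": {"city": "Auckland", "country": "NZ"},
    "lines": [
        {"sku": "A-1", "qty": 2, "unit_price": 10.0},
        {"sku": "B-7", "qty": 1, "unit_price": 25.0},
    ],
}


def order_total(doc: dict) -> float:
    """Everything needed is already inside the document, so no joins are required."""
    return sum(line["qty"] * line["unit_price"] for line in doc["lines"])


print(order_total(order))  # prints 45.0
```

With a driver, serving the resource then becomes a single lookup by `_id` rather than a multi-table join.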

When we remove complexity, everything gets better.

3. Use time-series collections where suitable.

The need to store time-series data has grown enormously over the last decade. IoT and AI have exploded, and storing time-series data efficiently has become imperative for many businesses. MongoDB 5.0 introduced time-series collections, and having worked with them a bit, I can honestly say that I'm impressed. Last year, as part of our production load testing of the Cloudize API Framework, we had no problems achieving over 30,000 inserts per second into a time-series collection on an unsharded M30 cluster.

That's mind-blowing performance on a database that costs less than US$400 per month.
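For readers who haven't created one yet, the following is a minimal sketch of setting up a time-series collection through PyMongo's `create_collection`. The collection name `readings` and the field names `ts` and `sensor` are assumptions for this example, and `db` is expected to be a PyMongo `Database` object (nothing here is specific to our framework).

```python
from datetime import datetime, timezone

# Assumed names: "ts" holds the timestamp, "sensor" holds per-series metadata.
TIMESERIES_OPTIONS = {
    "timeField": "ts",
    "metaField": "sensor",
    "granularity": "seconds",
}


def create_readings_collection(db):
    """Create the time-series collection; `db` is a PyMongo Database (or compatible)."""
    return db.create_collection("readings", timeseries=TIMESERIES_OPTIONS)


def make_reading(sensor_id: str, value: float) -> dict:
    """Build one measurement; the time field must contain a date value."""
    return {
        "ts": datetime.now(timezone.utc),
        "sensor": {"id": sensor_id},
        "value": value,
    }
```

Measurements built with `make_reading` can then be inserted like any other document, ideally in batches.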

4. Look for opportunities to leverage partial and sparse indexes.

The most significant factor driving your database costs up is typically the memory required by your database.

Let me explain. A database will try to keep all of your indexes in RAM so that it can find the data related to a query as quickly as possible. In addition to this, it will also build up a working set in RAM containing the documents that are accessed most frequently, but let's ignore this second factor for now.

One of my favourite features of MongoDB is that we can directly control the size of an index by using partial and sparse indexing.

Let me give you an example. Consider a company that executes trades between buyers and sellers. The company has over 100 million historical trades within a collection, and almost all of those trades have a state of "COMPLETED". They are held in the database for compliance purposes.

A new feature requires a query that returns trades with an "IN PROGRESS" state. Some analysis of the data shows that, in general, there would typically be about 10,000 trades with an "IN PROGRESS" state at any time, and the rest are marked as "COMPLETED".

Implementing a partial index whose filter matches only trades with an "IN PROGRESS" state, and so leaves out every "COMPLETED" trade, reduces that index's memory footprint by over 99.9%.

That's an incredible saving on precious database resources, resulting in you getting more performance from your cluster without increasing your spending.
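The trade example could be sketched as follows with PyMongo's `create_index`. The field name `state` and the index name are assumptions, and `trades` is expected to be a PyMongo `Collection`. The filter uses an equality match on "IN PROGRESS" because, as far as I'm aware, partial filter expressions do not accept `$ne`.

```python
ASCENDING = 1  # same value as pymongo.ASCENDING


def create_in_progress_index(trades):
    """Index only the trades that are still in progress.

    `trades` is a PyMongo Collection (or compatible). The field name
    "state" and the index name are assumptions for this example.
    """
    return trades.create_index(
        [("state", ASCENDING)],
        name="state_in_progress",
        partialFilterExpression={"state": "IN PROGRESS"},
    )


def index_reduction(total_docs: int, indexed_docs: int) -> float:
    """Fraction of index entries avoided compared with indexing every document."""
    return 1 - indexed_docs / total_docs


# 10,000 indexed trades out of 100 million in the collection.
print(f"{index_reduction(100_000_000, 10_000):.2%}")  # prints 99.99%
```

Keep in mind that a query has to include the same `state` condition for the planner to consider a partial index.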

5. Look for opportunities to use bulk writes.

Bulk writes are the best way to optimise write performance within a database without sharding. Whilst sharding allows us to scale write performance within our application, it does come at a considerable cost. Finding opportunities to implement bulk writes within your application effectively defers the need to shard and offsets the additional associated costs for a time.

It's about getting as much out of your unsharded cluster for as long as possible.
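A simple way to start is to group inserts into batches, so that each batch costs one round trip instead of one per document. The sketch below assumes a PyMongo `Collection` and uses its `insert_many` method; the batch size of 1,000 is an arbitrary starting point rather than a recommendation.

```python
from itertools import islice
from typing import Iterable, Iterator


def batched(docs: Iterable[dict], size: int) -> Iterator[list]:
    """Yield successive lists of at most `size` documents."""
    iterator = iter(docs)
    while batch := list(islice(iterator, size)):
        yield batch


def insert_in_batches(collection, docs: Iterable[dict], batch_size: int = 1000) -> int:
    """Insert documents batch by batch and return how many were inserted.

    ordered=False lets the server continue past an individual failure
    instead of stopping at the first error in a batch.
    """
    inserted = 0
    for batch in batched(docs, batch_size):
        result = collection.insert_many(batch, ordered=False)
        inserted += len(result.inserted_ids)
    return inserted
```

For a mix of inserts, updates and deletes, the drivers also offer a `bulk_write` operation that follows the same idea.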

6. Always, always, always protect your primary.

One of the most unfortunate things I see is that developers don't make use of their entire cluster. A typical MongoDB replica set comprises a primary and two secondary nodes. Writes must go to the primary, but more often than not, all reads are directed there as well. Secondary nodes are simply treated as spares in case the primary unexpectedly dies.

The reason? Well, there are several, but these are the most likely ones:

- It's the default driver behaviour.

- Reading from secondaries can introduce a risk of reading stale data.

- Implementing query targeting requires precious engineering effort, for which many competing priorities exist.

Not implementing query targeting, however, is a lost opportunity that results in the premature need to scale database clusters, which translates directly into unnecessary additional operational costs.

The later query targeting is introduced into a system, the more difficult it will be to introduce. But there may be some low-hanging fruit, and thoughtful iteration can get you close to a more balanced use of your cluster.

Strategies for mitigating the risk of reading stale data are critical. The following are some strategies that we prefer:

- Where data is low-velocity (low writes-per-second), target secondaries for read queries and require replication to majority on write.

- Where data is high-velocity (high writes-per-second), target secondaries only if stale data is tolerable; otherwise, read from the primary.

In summary, the focus must always be on reducing the load on the primary node as much as possible. This needs to be a deliberate focus and requires engineering effort.
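One low-effort way to experiment with the two strategies above is through standard connection string options. The sketch below only builds the URI; the host names, the database name and the `build_uri` helper are hypothetical. The value 90 is the smallest `maxStalenessSeconds` that drivers accept, not a tuned figure.

```python
from urllib.parse import urlencode


def build_uri(hosts: str, database: str, *, read_from_secondaries: bool) -> str:
    """Build a MongoDB URI with majority writes and a chosen read preference."""
    options = {"w": "majority"}  # acknowledge writes only once a majority has them
    if read_from_secondaries:
        # Low-velocity data: prefer secondaries, but skip ones lagging too far behind.
        options["readPreference"] = "secondaryPreferred"
        options["maxStalenessSeconds"] = "90"
    else:
        # High-velocity data where stale reads are not tolerable.
        options["readPreference"] = "primary"
    return f"mongodb://{hosts}/{database}?{urlencode(options)}"


print(build_uri("db1.example.com,db2.example.com", "app", read_from_secondaries=True))
```

A majority write concern narrows the window for stale reads but does not guarantee that every secondary has the write, so treat this as a mitigation rather than a guarantee. Most drivers also let you set the read preference per collection or per operation, which is usually the better fit once you know which queries tolerate staleness.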

7. Prewarm your connection pools.

MongoDB drivers implement connection pools, and managing those pools well can minimise server resource usage and maximise application performance. There isn't a one-size-fits-all answer for this. It comes down to understanding your application and its access patterns.

Most drivers let you set the minimum and maximum pool size, and both need consideration. Still, irrespective of where you land on those values, you will want to pre-connect the connection pool to the database before bringing an application into service.
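As a minimal sketch of the pre-connect step, the helper below pings the deployment until it answers, so that the first user request is not the one that pays for establishing a connection. The `wait_until_ready` name, the retry count and the delay are assumptions; `client` is expected to be a PyMongo `MongoClient`.

```python
import time


def wait_until_ready(client, attempts: int = 5, delay_seconds: float = 1.0) -> bool:
    """Return True once the deployment answers a ping, False if it never does."""
    for attempt in range(attempts):
        try:
            client.admin.command("ping")  # forces a real round trip to the server
            return True
        except Exception:
            # Not reachable yet: wait before the next attempt, unless it was the last.
            if attempt < attempts - 1:
                time.sleep(delay_seconds)
    return False
```

You would typically call this at start-up, after creating the client with your chosen `minPoolSize` and `maxPoolSize`, and only mark the application as ready once it returns `True`. A single ping proves connectivity but opens only one connection; filling the pool up to the minimum size is left to the driver.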

8. Enable the "Require Indexes" flag on your dev clusters.

MongoDB Atlas provides a "Require Indexes for All Queries" flag in the cluster configuration. This is a great flag to set on development clusters as it highlights to developers where queries will perform collection scans and where indexes are required. One of the reasons this is a great feature is that development databases are usually orders of magnitude smaller than production databases. As such, the impact of collection scans is often hidden during testing.

If a development cluster has enough resources to load the entire development database into RAM (frequently true), you will probably never know that a collection scan is happening (as it's happening in RAM). Having the database return an error in that scenario prevents the downstream impact should the application be pushed to production.

I would not enable this flag on production clusters.
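If you would rather check for collection scans in production without that flag, one option is to inspect query plans yourself. The sketch below walks the winning plan of an explain result and looks for a `COLLSCAN` stage. The two sample plans are trimmed, hand-written illustrations rather than real server output.

```python
def plan_has_collscan(plan) -> bool:
    """Recursively look for a COLLSCAN stage anywhere inside a plan."""
    if isinstance(plan, dict):
        if plan.get("stage") == "COLLSCAN":
            return True
        return any(plan_has_collscan(value) for value in plan.values())
    if isinstance(plan, list):
        return any(plan_has_collscan(item) for item in plan)
    return False


def winning_plan_has_collscan(explain: dict) -> bool:
    """Check only the winning plan, ignoring any rejected plans."""
    return plan_has_collscan(explain.get("queryPlanner", {}).get("winningPlan", {}))


# Illustrative, heavily trimmed explain results.
scan = {"queryPlanner": {"winningPlan": {"stage": "COLLSCAN", "direction": "forward"}}}
indexed = {
    "queryPlanner": {
        "winningPlan": {
            "stage": "FETCH",
            "inputStage": {"stage": "IXSCAN", "indexName": "state_1"},
        }
    }
}

print(winning_plan_has_collscan(scan))     # prints True
print(winning_plan_has_collscan(indexed))  # prints False
```

With PyMongo, the input would come from something like `collection.find(query).explain()`.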

9. Use MongoDB Atlas Search - be the rockstar.

MongoDB Atlas Search is a superpower.

It's like Google on your database. Your users are going to love it, and they're going to love you for it. It takes a bit of work to implement, but it's well worth the effort.
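To give a feel for the work involved, here is a minimal sketch of an aggregation pipeline that uses the `$search` stage. It assumes a search index named `default` already exists on the collection and that documents have `title` and `description` fields; both are assumptions for this example.

```python
def search_pipeline(term: str, limit: int = 10) -> list:
    """Build an aggregation pipeline that runs a fuzzy full-text search."""
    return [
        {
            "$search": {
                "index": "default",  # assumed index name
                "text": {
                    "query": term,
                    "path": ["title", "description"],  # assumed field names
                    "fuzzy": {"maxEdits": 1},  # tolerate a single typo
                },
            }
        },
        {"$limit": limit},
        # Return the title together with the relevance score of each hit.
        {"$project": {"title": 1, "score": {"$meta": "searchScore"}}},
    ]
```

The pipeline is passed to the collection's `aggregate` method like any other, and `$search` has to be its first stage.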

10. Automate DevOps via the Atlas Administration API.

One of the lesser-known features of MongoDB Atlas is the Atlas Administration API. The API is well worth a look and lets you build complex DevOps automation into your CI/CD processes, covering cluster deployment, security, and scaling, to name a few of the capabilities.
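As one example of what this looks like, the sketch below builds (but does not send) a request that pauses or resumes a cluster, which ties in nicely with tip 1. The project ID and cluster name are placeholders, the versioned `Accept` header value is an assumption you should check against the current API reference, and authentication is deliberately left out.

```python
import json
from urllib.request import Request

ATLAS_BASE_URL = "https://cloud.mongodb.com/api/atlas/v2"


def pause_cluster_request(group_id: str, cluster_name: str, paused: bool = True) -> Request:
    """Build a PATCH request that sets a cluster's `paused` flag.

    `group_id` is the Atlas project ID. The request is only constructed
    here; sending it requires Atlas API credentials, which are not shown.
    """
    url = f"{ATLAS_BASE_URL}/groups/{group_id}/clusters/{cluster_name}"
    return Request(
        url,
        data=json.dumps({"paused": paused}).encode("utf-8"),
        method="PATCH",
        headers={
            # Assumed resource version; confirm against the API reference.
            "Accept": "application/vnd.atlas.2023-02-01+json",
            "Content-Type": "application/json",
        },
    )


# Placeholder identifiers for illustration only.
request = pause_cluster_request("<project-id>", "dev-cluster")
print(request.get_method(), request.full_url)
```

A scheduled job in your CI/CD tooling could send one such request at the end of the working day and another with `paused=False` the next morning.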

In closing

You might feel that this seems to be quite a lot to consider, and you're right, but the undeniable reality is that building efficient, high-performance applications doesn't happen by accident and depends on an appropriate level of engineering effort. The good news is that with some careful planning, most of these tips can be implemented and delivered at a framework level.

When we built the Cloudize API Framework, we spent months implementing these and other techniques into the framework's core, and the return for our customers and ourselves has been outstanding. Developers no longer have to think about writing code to implement these techniques. A few simple configuration options in our designer are all that it takes, and our low-code technology takes care of the rest.